Linear Algebra


Linear algebra is the branch of mathematics that studies linear equations and their representation using vector spaces and matrices.

The two primary mathematical entities of interest in linear algebra are the vector and the matrix. They are examples of a more general entity known as a tensor. A tensor has an order (or rank), which determines the number of dimensions (axes) of the array required to represent it: a scalar is a tensor of order 0, a vector is a tensor of order 1, and a matrix is a tensor of order 2.

Vectors: A one-dimensional, ordered set of numerical elements of a particular size.

NumPy is the standard Python library for working with these data structures. Some examples of generating vectors are:

import numpy as np

# Vector of 10 ones

a1 = np.ones(10)

print(a1)

# Vector of 10 evenly spaced numbers, starting at 3 and ending at 15 (both included)

a2 = np.linspace(3, 15, 10)

print(a2)

# Vector of 10 random numbers between [0, 1)

a3 = np.random.rand(10)

print(a3)

# Vector of 10 random integers in [1, 10), that is, from 1 to 9

a4 = np.random.randint(1, 10, size=10)

print(a4)

Matrix: A two-dimensional, rectangular arrangement of numbers in rows and columns; each row can be viewed as a vector.

With NumPy you can also generate matrices. Some examples of how to generate them are:

# 5x5 Identity matrix (5 rows, 5 columns)

m1 = np.identity(5)

print(m1)

# 3x2 (3 rows, 2 columns) matrix with random numbers between [0, 1)

m2 = np.random.rand(3, 2)

print(m2)

# 7x5 matrix (7 rows, 5 columns) with random integers from 5 to 18 (the upper bound 19 is excluded)

m3 = np.random.randint(5, 19, size=(7, 5))

print(m3)

Tensors: A tensor is an array of numbers or functions that transforms according to certain rules when the coordinates change. Working with tensors is usually more advanced and is typically done with Machine Learning and model-oriented libraries such as TensorFlow or PyTorch.
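In NumPy, the order of a tensor corresponds to the number of axes of an array, which is exposed as the `ndim` attribute. (This "rank" is not the same thing as the rank of a matrix computed by `np.linalg.matrix_rank`.) A minimal sketch, with arbitrary example values, that builds tensors of order 0 to 3 as NumPy arrays:

```python
import numpy as np

# Order 0: a scalar has no axes
scalar = np.array(5)
# Order 1: a vector has one axis
vector = np.array([1, 2, 3])
# Order 2: a matrix has two axes (rows and columns)
matrix = np.array([[1, 2, 3], [4, 5, 6]])
# Order 3: a stack of two 2x3 matrices filled with zeros
tensor = np.zeros((2, 2, 3))

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("tensor", tensor)]:
    print(name, "order:", t.ndim, "shape:", t.shape)
```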

Machine Learning lies at the intersection of Computer Science and Statistics.

Vectors

Since scalars exist to represent values, why are vectors necessary? One of the main use cases for vectors is to represent physical quantities that have both a magnitude and a direction. Scalars are only capable of representing magnitudes.

For example, the difference between the speed of a car and its velocity is the difference between a scalar and a vector: the velocity contains not only the car's speed, but also its direction of travel.

In Machine Learning, vectors often represent feature vectors, with their individual components specifying how important a particular feature is. Such features might include the relative importance of words in a text document, the intensity of a set of pixels in a two-dimensional image, or historical price values for a representative sample of financial instruments.

Scalar Operations

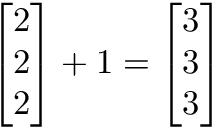

Scalar operations involve a vector and a single number (a scalar): the operation is applied to each element of the vector.

# Adding a scalar to a vector. In the following example, each element of the vector will have 2 added to it

a1 = np.array([1, 2, 3, 4])

print(a1 + 2)

# Subtracting a scalar from a vector. In the following example, each element of the vector will have 1 subtracted from it

print(a1 - 1)

# Multiplying a vector by a scalar. In the following example, each element of the vector will be multiplied by 10

print(a1 * 10)

# Dividing a vector by a scalar. In the following example, each element of the vector will be divided by 5

print(a1 / 5)
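Each of these scalar operations is applied element by element. As a sketch, the same result can be reproduced with an explicit Python loop (here a list comprehension), which is the work NumPy's vectorized arithmetic does for you:

```python
import numpy as np

a1 = np.array([1, 2, 3, 4])

# Vectorized: NumPy adds 2 to every element at once
vectorized = a1 + 2
# Explicit loop: add 2 to each element one at a time
looped = np.array([x + 2 for x in a1])

print(vectorized)                          # [3 4 5 6]
print(np.array_equal(vectorized, looped))  # True
```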

Vector multiplication

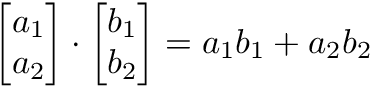

There are two common types of vector multiplication: the dot product and the Hadamard product.

The dot product of two vectors of the same length is a scalar, obtained by multiplying corresponding elements and adding up the results. The dot product of vectors and matrices (matrix multiplication) is one of the most important operations in deep learning.

a1 = np.array([1, 2, 3, 4])

a2 = np.linspace(0, 20, 4)

# Dot product of a1 and a2 (returns a single number)

a1.dot(a2)
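The dot product can be written as $\mathbf{a} \cdot \mathbf{b} = \sum_i a_i b_i$. A minimal sketch that computes it manually and compares the result with NumPy's `dot` and the `@` operator; integer vectors with arbitrary values are used so the result is exact:

```python
import numpy as np

a = np.array([1, 2, 3, 4])
b = np.array([5, 6, 7, 8])

# Manual dot product: multiply corresponding elements, then sum
manual = sum(x * y for x, y in zip(a, b))

print(manual)    # 70
print(a.dot(b))  # 70
print(a @ b)     # 70 (the @ operator also computes the dot product)
```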

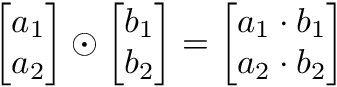

The Hadamard product is element-wise multiplication: corresponding elements are multiplied, producing a new vector of the same size.

a1 = np.array([1, 2, 3, 4])

a2 = np.array([10, 10, 10, 10])

# Hadamard (element-wise) product: returns a vector of the same size

a1 * a2
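The Hadamard product keeps the shape of its inputs, whereas the dot product collapses them into a single number; summing the Hadamard product gives the dot product. A short sketch with the same vectors as above (`np.multiply` is the function form of `*`):

```python
import numpy as np

a1 = np.array([1, 2, 3, 4])
a2 = np.array([10, 10, 10, 10])

# Element-wise (Hadamard) product: same shape as the inputs
hadamard = np.multiply(a1, a2)
print(hadamard)        # [10 20 30 40]

# Summing the Hadamard product gives the dot product
print(hadamard.sum())  # 100
print(a1.dot(a2))      # 100
```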

Matrices

A matrix is a rectangular grid of numbers or terms (like an Excel spreadsheet) with special rules for addition, subtraction, and multiplication.

Matrix dimensions: We describe the dimensions of a matrix in terms of rows by columns; for example, a matrix with 2 rows and 4 columns is a 2x4 matrix.

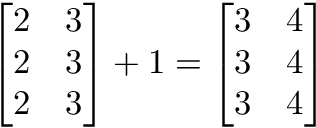
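In NumPy, the dimensions of a matrix are available through the `shape` attribute, which returns a `(rows, columns)` tuple. A quick sketch:

```python
import numpy as np

# A matrix with 2 rows and 4 columns
m = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

rows, cols = m.shape
print(m.shape)  # (2, 4)
print(rows, "rows x", cols, "columns")
```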

Scalar matrix operations

Scalar operations with matrices work the same way as with vectors.

You simply apply the scalar to each element of the matrix: add, subtract, multiply, divide, etc.

# Adding a scalar to a matrix (in this case a 2x4 matrix: 2 rows and 4 columns). In the following example, each element of the matrix will have 10 added to it

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

print(m1 + 10)

# Subtracting a scalar from a matrix. In the following example, each element of the matrix will have 5 subtracted from it

print(m1 - 5)

# Multiplying a matrix by a scalar. In the following example, each element of the matrix will be multiplied by 3

print(m1 * 3)

# Dividing a matrix by a scalar. In the following example, each element of the matrix will be divided by 2

print(m1 / 2)

Element-wise matrix operations

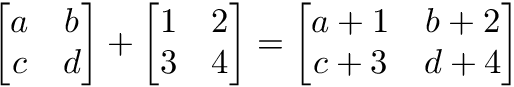

To add, subtract, or divide two matrices, they must have the same dimensions. We combine the corresponding values element by element to produce a new matrix.

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

m2 = np.array([[2, 4, 6, 8], [-1, -2, -3, -4]])

# Adding two matrices. They must have the same dimensions (same number of rows and columns). Elements in the same position in the first and second matrices are added together

print(m1 + m2)

# Subtracting two matrices. They must have the same dimensions (same number of rows and columns). Each element of the second matrix is subtracted from the element in the same position of the first matrix

print(m1 - m2)

# Dividing two matrices. They must have the same dimensions (same number of rows and columns). Each element of the first matrix is divided by the element in the same position of the second matrix

print(m1 / m2)
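If the dimensions are incompatible, NumPy refuses the element-wise operation and raises a `ValueError`. NumPy's broadcasting rules do allow some differing shapes (for example, adding a single row to every row of a matrix), but a 2x4 and a 3x2 matrix cannot be combined. A minimal sketch:

```python
import numpy as np

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])  # 2x4
m3 = np.array([[1, 2], [3, 4], [5, 6]])      # 3x2

raised = False
try:
    m1 + m3
except ValueError as err:
    # Shapes (2, 4) and (3, 2) cannot be combined element by element
    raised = True
    print("ValueError:", err)
```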

Matrix multiplication

There are two common types of matrix multiplication: the dot product and the Hadamard product.

The dot product of two matrices is a matrix with the number of rows of the first matrix and the number of columns of the second matrix. The number of columns in the first matrix must equal the number of rows in the second. That is, if matrix "A" has dimensions $5 \times 3$ (5 rows and 3 columns) and matrix "B" has dimensions $3 \times 2$ (3 rows and 2 columns), they can be multiplied because the first has 3 columns and the second has 3 rows, and the resulting matrix has dimensions $5 \times 2$.

In the dot product of matrices, the element $c_{ij}$ of the product matrix $C = AB$ is obtained by multiplying each element of row $i$ of matrix "A" by the corresponding element of column $j$ of matrix "B" and adding the products together: $c_{ij} = \sum_{k} a_{ik} b_{kj}$.

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

m2 = np.array([[2, 4], [6, 8], [-1, -2], [-3, -4]])

# Dot product of a 2x4 matrix and a 4x2 matrix: the result is a 2x2 matrix

m1.dot(m2)
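The formula $c_{ij} = \sum_k a_{ik} b_{kj}$ can be written out with explicit loops. The following is a teaching sketch (the helper name `matmul_loops` is hypothetical, not a NumPy function) that checks the dimension rule, fills the result element by element, and compares it with NumPy's `dot` and the `@` operator. In practice the built-in versions are much faster:

```python
import numpy as np

def matmul_loops(a, b):
    """Multiply two 2-D arrays with explicit loops (hypothetical helper for illustration)."""
    n_rows, n_inner = a.shape
    # Columns of the first matrix must equal rows of the second
    if b.shape[0] != n_inner:
        raise ValueError("columns of a must equal rows of b")
    n_cols = b.shape[1]
    c = np.zeros((n_rows, n_cols), dtype=np.result_type(a, b))
    for i in range(n_rows):
        for j in range(n_cols):
            # c[i, j] = sum over k of a[i, k] * b[k, j]
            for k in range(n_inner):
                c[i, j] += a[i, k] * b[k, j]
    return c

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])
m2 = np.array([[2, 4], [6, 8], [-1, -2], [-3, -4]])

result = matmul_loops(m1, m2)
print(result)
print(np.array_equal(result, m1.dot(m2)), np.array_equal(result, m1 @ m2))  # True True
```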

The Hadamard product of matrices is an element-wise operation: positionally corresponding values are multiplied to produce a new matrix of the same dimensions.

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

m2 = np.array([[2, 4, 6, 8], [-1, -2, -3, -4]])

m1 * m2

Matrix transpose

Transposing a matrix swaps its rows and columns, so an $m \times n$ matrix becomes an $n \times m$ matrix. This provides a way to "rotate" one of the matrices so that the operation meets the multiplication requirements and can continue.

There are two steps to transpose a matrix:

- Rotate the matrix 90° to the right.

- Reverse the order of the elements in each row (e.g., [a, b, c] becomes [c, b, a]).
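The two-step recipe can be checked with NumPy: `np.rot90` with `k=-1` rotates an array 90° clockwise (to the right), and `np.fliplr` reverses the order of the elements in each row. A small sketch confirming that the result equals the transpose:

```python
import numpy as np

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

# Step 1: rotate 90 degrees to the right (clockwise)
rotated = np.rot90(m1, k=-1)
# Step 2: reverse the order of the elements in each row
transposed = np.fliplr(rotated)

print(transposed)
print(np.array_equal(transposed, m1.T))  # True
```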

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

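# Transpose using the .T attribute: the 2x4 matrix becomes a 4x2 matrix

print(m1.T)

# Equivalent method call

print(m1.transpose())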
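Transposing is what makes some products possible. A 2x4 matrix cannot be multiplied by itself, because its 4 columns do not match its 2 rows, but it can be multiplied by its 4x2 transpose. A minimal sketch:

```python
import numpy as np

m1 = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])  # 2x4

# (2x4) @ (2x4) fails: 4 columns do not match 2 rows
try:
    m1 @ m1
except ValueError as err:
    print("Cannot multiply:", err)

# (2x4) @ (4x2) works and gives a 2x2 matrix
product = m1 @ m1.T
print(product)
```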

Applications of linear algebra

Linear algebra has a direct impact on many processes involving data:

- The data in a dataset is vectorized: each row is represented as a vector, and rows are fed into a model one at a time (or in batches) for simpler and more efficient calculations.

- Digital images have a tabular (matrix) structure of pixel values. Image editing techniques, such as cropping and scaling, use algebraic operations.

- Regularization is a method that penalizes large model coefficients during training, keeping their size small to reduce overfitting.

- Deep learning works with vectors, matrices, and even higher-order tensors, since neural networks are built largely from matrix multiplications and additions.

- One-hot encoding represents categories as binary vectors to facilitate algebraic operations.

- In linear regression, linear algebra is used to describe the relationship between variables.

- Redundant columns can be removed from a dataset through dimensionality reduction; principal component analysis (PCA), for instance, relies on matrix factorization.

- With the help of linear algebra (for example, factorizing a matrix of user-item interactions), recommender systems can make more refined predictions.
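As one concrete illustration of the one-hot encoding item above, the sketch below turns category labels into binary vectors by selecting rows of an identity matrix. The color labels are made up for the example; libraries such as pandas and scikit-learn provide their own encoders:

```python
import numpy as np

labels = ["red", "green", "blue", "green"]

# Map each distinct category to an integer index (sorted for a stable order)
categories = sorted(set(labels))  # ['blue', 'green', 'red']
index = {cat: i for i, cat in enumerate(categories)}
codes = np.array([index[label] for label in labels])

# Row k of the identity matrix is the one-hot vector for category k
one_hot = np.identity(len(categories))[codes]
print(one_hot)
```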