Connecting to SQL Databases

Connecting to SQL databases using Python¶

As Machine Learning experts and depending on how the information is arranged, we will probably have to interact with SQL databases. It is a very common flow to ingest data in Python through SQL or non-relational databases and use Python to analyze it, graph it, define it and ultimately build a predictive model.

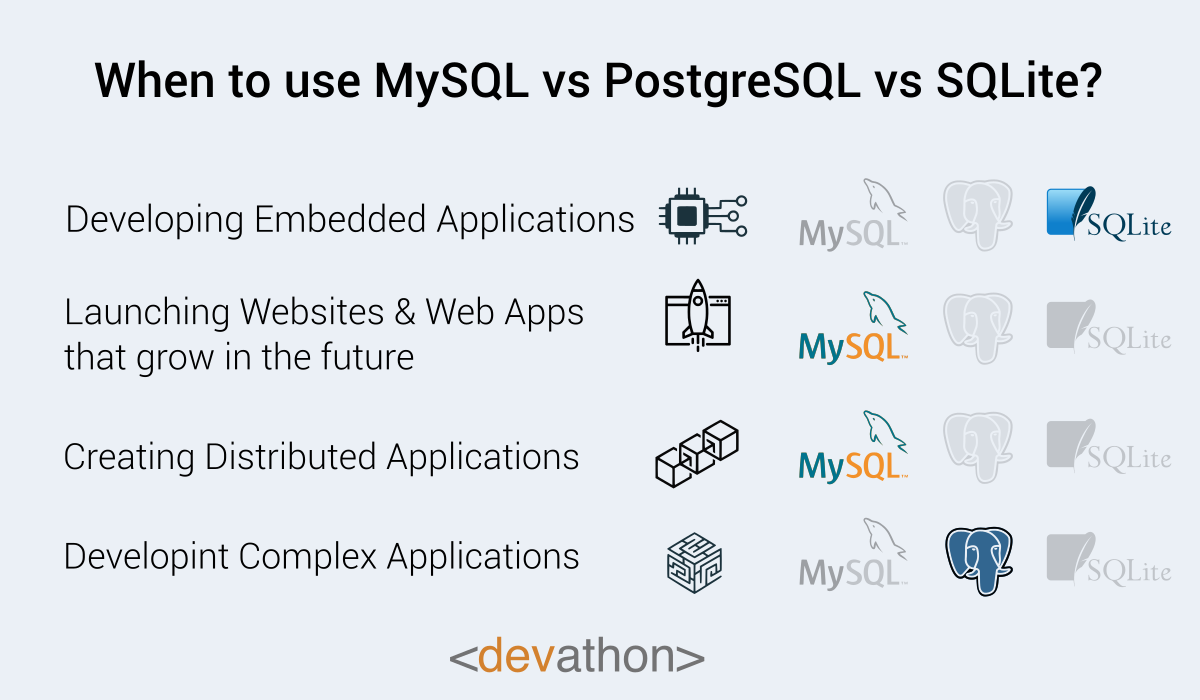

Some of the most commonly used SQL databases are SQLite, PostgreSQL and MySQL. SQLite is the best alternative when we want a portable database, without having to use a separate server to run it. It is ideal for embedded applications. If we want something more scalable in the future and a database for distributed applications, then MySQL is a good choice, while PostgreSQL is an opportune database for complex applications and is used in most enterprise solutions.

In this lesson, we will see how Python and some SQL databases interact. Why should we care about connecting Python and a SQL database? Perhaps, as Machine Learning experts, we need to build an automated ETL pipeline. Connecting Python to a SQL database will allow us to use Python for its automation capabilities. We will also be able to communicate between different data sources. We won't have to switch between different programming languages, we will be able to use our Python skills to manipulate data from an SQL database, Furthermore, we won't need a CSV file.

SQLite connection¶

Python has its own wrapper to make a connection to a SQLite database. Also, this package comes installed in the base version, and you don't need to install anything to start using it. Because the database is simple, so is its code:

import sqlite3

import pandas as pd

# Connect to the database or create it if it doesn't exist

con = sqlite3.connect("test.db")

### CREATE: Generate a table named COMPANY with 5 columns: ID, NAME, AGE, ADDRESS and SALARY

con.execute("""CREATE TABLE COMPANY (

ID INT PRIMARY KEY NOT NULL,

NAME TEXT NOT NULL,

AGE INT NOT NULL,

ADDRESS CHAR(50),

SALARY REAL

)""")

### INSERT: Insert records into the COMPANY table

con.execute("""INSERT INTO COMPANY VALUES (1, 'Richard', 40, 'Spain', 20000.00)""")

con.execute("""INSERT INTO COMPANY VALUES (2, 'Óscar', 25, 'France', 15000.00)""")

con.commit()

### SELECT: Filter and print some of the table values

# The cursor allows you to define a set of results

cursor = con.execute("SELECT * from COMPANY")

for row in cursor: # Iterate through all the rows of the resulting filter

print(f"ID = {row[0]} NAME = {row[1]} ADDRESS = {row[2]} SALARY = {row[3]}")

# The results can also be stored in a DataFrame using Pandas

cursor_df = pd.read_sql_query("SELECT * FROM COMPANY", con)

### UPDATE: Update a record in the COMPANY table

con.execute("UPDATE COMPANY set SALARY = 25000.00 where ID = 1")

con.commit()

### DELETE: Delete a record from the COMPANY table

con.execute("DELETE from COMPANY where ID = 2")

con.commit()

### Terminate database connection

con.close()

As you can see, this package provides a very friendly interface to create a portable and easy to use database. With just two statements, con.execute() and con.commit() we can do almost everything we need to interact with it. In contrast to SQLAlchemy, here it is necessary to know SQL syntax.

Connecting to MySQL¶

As a primary language to analyze data, Python also has its own wrapper to make a connection to a MySQL database, PyMySQL. Since it is a proprietary database, it requires prior installation on a server or operating system and its instantiation to be able to close the connection and be able to use it.

Connection to the database¶

MySQL itself will allow us to create a database by simply clicking on a button and entering a name. This database can then be referenced when establishing the connection. If we wish to use Python to create a database, we would simply omit the parameter in the call, as we will see below.

1. Database already created previously¶

In this case, we enter the information as follows to establish the connection.

import pymysql

con = pymysql.connect(host = "localhost",

user = "root",

password = "pass",

db = "my_database", # Name of the database if we have created it before

)

2. Database not created¶

In this case, we simply remove the db argument from the call and execute the creation statement. To do this, we use the cur.execute() statement (this package requires a cursor to be defined for each call) and then select it with con.select_db():

import pymysql

# Connect to MySQL server

con = pymysql.connect(host='localhost',

user='root',

password='password')

# Create a cursor object

cur = con.cursor()

# Execute SQL query to create a new database

database_name = 'my_database'

cur.execute(f"CREATE DATABASE {database_name}")

# Switch to the newly created database

cur.execute(f"USE {database_name}")

# Creating a new table

cur.execute("""

CREATE TABLE IF NOT EXISTS COMPANY (

ID INT PRIMARY KEY NOT NULL,

NAME TEXT NOT NULL,

AGE INT NOT NULL,

ADDRESS CHAR(50),

SALARY REAL

)

""")

# Close cursor and connection when we are done working

cur.close()

con.close()

Using the database¶

The execution of the statements that we already know about the database is the same as in the case of SQLite, with the cur.execute() and con.commit() functions, but with the difference that the execution is done with the cursor and not with the connection itself.

### INSERT: Insert a record into the database

cur.execute("""INSERT INTO COMPANY VALUES (1, 'Richard', 40, 'Spain', 20000.00)""")

cur.execute("""INSERT INTO COMPANY VALUES (2, 'Óscar', 25, 'France', 15000.00)""")

con.commit()

### SELECT: Filter and print some of the table values

# The cursor allows you to define a set of results

cur.execute("SELECT * from COMPANY")

rows = cur.fetchall()

for row in rows: # Iterate through all the rows of the resulting filter

print(f"ID = {row[0]} NAME = {row[1]} ADDRESS = {row[2]} SALARY = {row[3]}")

# The results can also be stored in a DataFrame using Pandas

import pandas as pd

cursor_df = pd.read_sql("SELECT * FROM COMPANY", con)

### UPDATE: Update a database record

cur.execute("UPDATE COMPANY set SALARY = 25000.00 where ID = 1")

con.commit()

### DELETE: Delete one or more records from the database

cur.execute("DELETE from COMPANY where ID = 2")

con.commit()

### Terminate database connection

cur.close()

con.close()

Connecting to PostgreSQL¶

As a third alternative, to connect to PostgreSQL using Python there is also a library, Psycopg2. It is the most known and used wrapper, although there are others as well. This package keeps the essence of PyMySQL statements, requiring for its use the connection and cursor objects. The connection is also very simple, and we can either create a database with Python or connect directly to it through the package.

Database connection¶

PostgreSQL itself will allow us to create a database by simply clicking a button and entering a name. This database can then be referenced when establishing the connection. If we wish to use Python to create a database, we would simply omit the parameter in the call, as we will see below.

1. Database already created previously¶

In this case, we enter the information as follows to establish the connection.

import psycopg2

con = psycopg2.connect(host = "localhost",

user = "root",

password = "pass",

db = "my_database", # Name of the database if we have created it before

)

2. Database not created¶

In this case, we simply remove the db argument from the call and execute the creation statement. For this, we use the cur.execute() and con.commit() statements:

import psycopg2

# Connect to PostgreSQL server

con = psycopg2.connect(

host="localhost",

user="root",

password="pass"

)

# Create a cursor object

cur = con.cursor()

# Create a new database

cur.execute("CREATE DATABASE your_database_name")

con.commit()

# Create the COMPANY table

cur.execute("""CREATE TABLE COMPANY

(ID INT PRIMARY KEY NOT NULL,

NAME TEXT NOT NULL,

AGE INT NOT NULL,

ADDRESS VARCHAR(50),

SALARY REAL)""")

con.commit()

# Close the cursor and connection

cur.close()

con.close()

Using the database¶

The execution of the sentences that we already know about the database is the same as in the case of MySQL, with the cur.execute() and con.commit() functions.

### INSERT: Insert a record into the database

cur.execute("""INSERT INTO COMPANY VALUES (1, 'Richard', 40, 'Spain', 20000.00)""")

cur.execute("""INSERT INTO COMPANY VALUES (2, 'Óscar', 25, 'France', 15000.00)""")

con.commit()

### SELECT: Filter from database values

cur.execute("SELECT * from COMPANY")

rows = cur.fetchall()

for row in rows: # Iterate through all the rows of the resulting filter

print(f"ID = {row[0]} NAME = {row[1]} ADDRESS = {row[2]} SALARY = {row[3]}")

con.commit()

# The results can also be stored in a DataFrame using Pandas

import pandas as pd

cursor_df = pd.read_sql("SELECT * FROM COMPANY", con)

### UPDATE: Update a database record

cur.execute("UPDATE COMPANY set SALARY = 25000.00 where ID = 1")

con.commit()

### DELETE: Delete one or more records from the database

cur.execute("DELETE from COMPANY where ID = 2")

con.commit()

### Terminate database connection

cur.close()

con.close()

Although in this lesson we have exemplified the code for the three packages, it is remarkable that the syntax is practically the same, especially in the case of PyMySQL and Psycopg2. These codes will serve as a guide to quickly learn how to interact with the most used relational databases in the world of data analysis.