Machine Learning Basics

Introduction to Machine Learning

Definition

Machine Learning is a branch of Artificial Intelligence that focuses on building systems that can learn from data, rather than just following explicitly programmed rules.

Machine Learning systems use algorithms to analyze data, learn from it and then make predictions, rather than being programmed specifically to perform the task. For example, a Machine Learning model could be trained to recognize cats by being provided with thousands of images with and without cats. Given enough examples, the system "learns" the defining characteristics of a cat, so it can identify cats in new images it has never seen before.

Types of learning

Depending on how the model can learn from the data, there are several types of learning:

Supervised learning

Models are trained on a labeled dataset. A labeled dataset is a dataset that contains both the input data and the correct answers, also known as labels or target values.

The goal of supervised learning is to learn a function that transforms the inputs into outputs. Depending on the type of output we want the model to be able to generate, we can divide models into several types:

- Regression. When the label or target value is a continuous number (such as the price of a house), the problem is considered a regression problem. The model must return a number on a continuous scale.

- Classification. When the label is categorical (such as whether an email is spam or not), the problem is a classification problem. The model must return the class that each input belongs to.

Some examples of models based on this type of learning are:

- Logistic and linear regression.

- Decision trees.

- Naive Bayes classifier.

- Support Vector Machines.
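
As a rough illustration of the two problem types above, the following sketch fits one regression model and one classification model with scikit-learn. The synthetic datasets generated with `make_regression` and `make_classification`, and all variable names, are assumptions made for this example and are not part of the original material.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.linear_model import LinearRegression, LogisticRegression

# Synthetic data (an assumption for illustration, not a real dataset)
X_reg, y_reg = make_regression(n_samples=200, n_features=3, noise=5.0, random_state=42)
X_clf, y_clf = make_classification(n_samples=200, n_features=4, random_state=42)

# Regression: the target is a continuous number
reg_model = LinearRegression().fit(X_reg, y_reg)
reg_prediction = reg_model.predict(X_reg[:1])

# Classification: the target is one of a fixed set of classes (here 0 or 1)
clf_model = LogisticRegression().fit(X_clf, y_clf)
clf_prediction = clf_model.predict(X_clf[:1])

print("Regression output:", reg_prediction[0])
print("Classification output:", clf_prediction[0])
```

The regression model returns a floating-point number, while the classifier returns one of the class labels it saw during training.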

Unsupervised learning

In this type of learning, as opposed to the previous one, models are trained on an unlabeled dataset. The objective is to find hidden patterns or structures in the data.

Since there are no labels, the models must discover the relationships in the data by themselves.

Some examples of techniques based on this type of learning are:

- Clustering.

- Dimensionality reduction.
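
The two techniques listed above can be sketched with scikit-learn as follows. The choice of `KMeans` for clustering and `PCA` for dimensionality reduction, as well as the synthetic data from `make_blobs`, are assumptions made for this example; many other algorithms exist for both tasks.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA

# Unlabeled synthetic data: we discard the labels that make_blobs returns
X, _ = make_blobs(n_samples=300, centers=3, n_features=5, random_state=42)

# Clustering: group similar observations without using labels
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
cluster_ids = kmeans.fit_predict(X)

# Dimensionality reduction: project the 5 features down to 2
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)

print("Cluster ids of the first 5 rows:", cluster_ids[:5])
print("Shape before and after PCA:", X.shape, X_reduced.shape)
```

Note that the number of clusters is something we choose here; the algorithm only decides which observations belong together.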

Reinforcement learning

In this type of learning, the model (also called the agent) learns to make optimal decisions through interaction with its environment. The goal is to maximize some notion of cumulative reward.

In reinforcement learning, the agent takes actions, which affect the state of the environment, and receives feedback in the form of rewards or penalties. The goal is to learn a strategy to maximize its long-term reward.

An example of this type of learning is a program that learns to play chess. The agent (the program) decides what move to make (the actions of moving pieces) in different positions on the chess board (the states) to maximize the chance of winning the game (the reward).

This type of learning is different from the previous two. Instead of learning from a set of data with or without labels, reinforcement learning is focused on making optimal decisions and learning from the feedback of those decisions.
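
Chess is far too large for a short example, so the sketch below illustrates the same loop of states, actions and rewards on a much smaller problem. It assumes a hypothetical corridor of 5 cells and uses tabular Q-learning, one of several reinforcement learning algorithms; the environment, the constants and the variable names are all invented for illustration.

```python
import numpy as np

# Hypothetical environment: a corridor of 5 cells. The agent starts in cell 0
# and receives a reward of 1 when it reaches cell 4, which ends the episode.
N_STATES = 5
ACTIONS = (-1, +1)  # action 0 moves left, action 1 moves right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (illustrative values)

rng = np.random.default_rng(0)
q_table = np.zeros((N_STATES, len(ACTIONS)))

for episode in range(500):
    state = 0
    while state != N_STATES - 1:
        # Explore with random actions; Q-learning is off-policy, so it can
        # still learn the values of the greedy strategy from this experience
        action = int(rng.integers(len(ACTIONS)))
        # Moving left from cell 0 leaves the agent where it is
        next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
        reward = 1.0 if next_state == N_STATES - 1 else 0.0
        # Move the estimate toward reward + discounted best future value
        target = reward + GAMMA * q_table[next_state].max()
        q_table[state, action] += ALPHA * (target - q_table[state, action])
        state = next_state

# Greedy strategy for the non-terminal cells
policy = q_table[:-1].argmax(axis=1)
print("Learned action per cell (1 = move right):", policy)
```

The agent is never told that moving right is correct. It only receives the reward at the end of the corridor and propagates that information back to earlier cells through the discounted update.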

Data sets

Data is a fundamental building block of any Machine Learning algorithm. Without it, regardless of the type of learning or model, there is no way to start any learning process.

A dataset is a collection that is usually represented in the form of a table. In this table, each row represents an observation or instance, and each column represents a characteristic, attribute or variable of that observation. This data set is used to train and evaluate models:

- Model training. A Machine Learning model learns from a training data set. The model trains by adjusting its parameters internally.

- Model evaluation. Once the model has been trained, an independent test dataset is used to evaluate its performance. This dataset contains observations that were not used during training, allowing an unbiased assessment of how the model is expected to make predictions on new data.

In some situations, a validation set is also used to evaluate the performance of a model during training. When several candidate models are trained, they are compared on the validation set to select the best one, and the test set is kept for the final evaluation.
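
One possible way to obtain training, validation and test sets is to call scikit-learn's `train_test_split` twice, as sketched below. The small housing table, its column names and the 60/20/20 proportions are hypothetical and chosen only for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical table: each row is an observation, each column a variable
rng = np.random.default_rng(42)
data = pd.DataFrame({
    "area_m2": rng.uniform(40, 200, size=100),
    "rooms": rng.integers(1, 6, size=100),
})
data["price"] = 1500 * data["area_m2"] + 10000 * data["rooms"]

X = data[["area_m2", "rooms"]]
y = data["price"]

# First hold out 20% of the rows as the test set
X_rest, X_test, y_rest, y_test = train_test_split(X, y, test_size=0.20, random_state=42)

# Then take 25% of the remainder (20% of the total) as the validation set
X_train, X_val, y_train, y_val = train_test_split(X_rest, y_rest, test_size=0.25, random_state=42)

print(len(X_train), len(X_val), len(X_test))
```

Each row ends up in exactly one of the three sets, so the validation and test metrics are computed on observations the model did not see during training.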

Division of the dataset

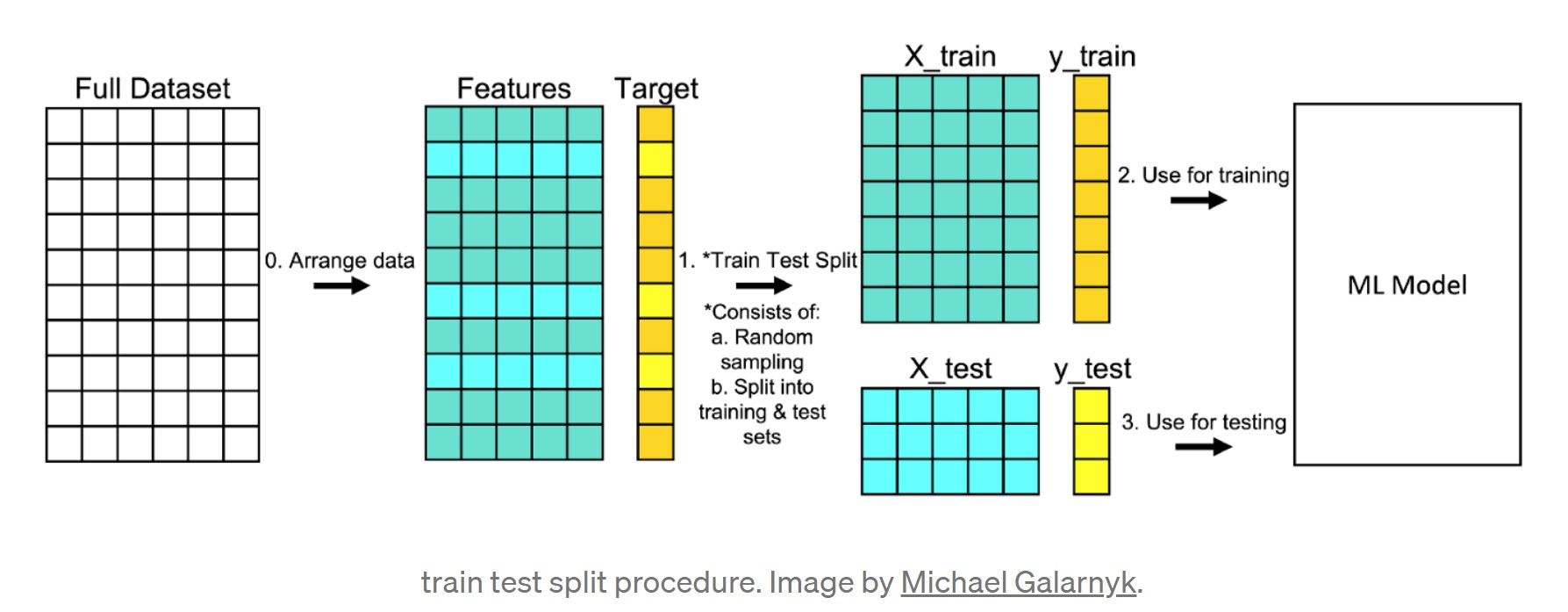

Before training a model, and in addition to exploratory data analysis (EDA), we divide the data into a training dataset (train dataset) and a test dataset (test dataset), following a procedure like this:

- Make sure your data is organized in a usable format. Text data should be in table format; for images, we work with the image files themselves.

- Divide the data set into two parts: a training set and a test set. We randomly select 80% of the rows (the proportion may vary) for the training set and place the remaining 20% in the test set. In addition, we must separate the predictors from the target, which gives 4 elements: `X_train`, `y_train`, `X_test`, `y_test`.

- Train the model using the training set (`X_train`, `y_train`).

- Test the model using the test set (`X_test`, `y_test`).

```python
import seaborn as sns
from sklearn.model_selection import train_test_split

# We load the data set
total_data = sns.load_dataset("attention")
features = ["subject", "attention", "solutions"]
target = "score"

# We separate the predictors from the label
X = total_data[features]
y = total_data[target]

# We divide the sample into train and test at 80%
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, train_size=0.80)
```
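
The last two steps of the procedure, training on (`X_train`, `y_train`) and testing on (`X_test`, `y_test`), could look roughly like the following. To stay self-contained, this sketch uses synthetic data from `make_regression` instead of the dataset above, and it assumes a `LinearRegression` model scored with R2; both choices are illustrative rather than prescribed by the text.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic regression data (an assumption, used so the example runs offline)
X, y = make_regression(n_samples=200, n_features=3, noise=5.0, random_state=42)

# Same 80/20 split as in the procedure above
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, train_size=0.80)

# Train the model using only the training set
model = LinearRegression()
model.fit(X_train, y_train)

# Test the model on rows it has never seen
y_pred = model.predict(X_test)
test_r2 = r2_score(y_test, y_pred)

print("R2 on the test set:", round(test_r2, 3))
```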

Model overfitting

Overfitting occurs when the model fits the training data too closely, for example because it is too complex for the amount of data available or has been trained for too long. In that case, the model starts to learn the noise and inaccurate entries in our dataset instead of the underlying pattern. Because of this, it does not return accurate output on new data. Combating overfitting is an iterative task that relies on the developer's experience, and we can start with:

- Performing a correct EDA, selecting meaningful values and variables for the model following the "less is more" rule.

- Simplifying or changing the model we are using.

- Using more training data, when it is available.

Detecting whether the model is overfitting the data is also a matter of practice. A common sign is that the model metric (for a metric where higher is better, such as accuracy) is very high on the training data set and noticeably lower on the test set.

Conversely, if we have not trained the model sufficiently (underfitting), we can also see this by comparing the training and test metrics: if they are relatively equal and both low, our model is most likely not fitting our training data well.
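
The comparison between training and test metrics can be sketched as follows. This example assumes decision trees on noisy synthetic data from `make_classification`: an unrestricted tree stands in for an overly complex model, and a tree limited with `max_depth=3` for a simpler one. The dataset, the depth and the variable names are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy classification data (flip_y randomly reassigns 20% of the labels)
X, y = make_classification(n_samples=500, n_features=10, flip_y=0.2, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.80, random_state=42)

# An unrestricted tree can memorize the training set, noise included
deep_tree = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)
deep_train_acc = deep_tree.score(X_train, y_train)
deep_test_acc = deep_tree.score(X_test, y_test)

# A depth-limited tree is a simpler model ("simplifying the model")
shallow_tree = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)
shallow_train_acc = shallow_tree.score(X_train, y_train)
shallow_test_acc = shallow_tree.score(X_test, y_test)

print("Unrestricted tree, train vs test accuracy:", deep_train_acc, deep_test_acc)
print("Depth-3 tree, train vs test accuracy:", shallow_train_acc, shallow_test_acc)
```

A large gap between the two accuracies of the unrestricted tree is the overfitting signal described above. Whether the simpler tree generalizes better depends on the data, so both pairs of numbers should be checked rather than assumed.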